Living near a busy road, I have recently taken an interest in air quality. To that end, I have acquired a PMS5003 from Pimoroni to connect to a Pi Zero W that I had been auditioning Pi Core Player on. [efn_note]Pi Core Player is great – especially as I have a venerable [but still working] Slimp3 connected to Logitech Media Server – but it’s a project for another day.[/efn_note]

The PMS5003

The PMS5003 is matchbox-sized and works by means of a fan that draws air through a chamber in which a laser shines past an optical sensor. Airborne particles scatter the laser light onto the sensor, and thus the device can count them. I am not sure how it determines the sizes of the particles. If you’re worried about noise, don’t be – the fan is quiet to the point of being inaudible. The PMS5003 is powered from the Pi Zero W, so in hardware terms this is a fairly simple project to put together.

Pimoroni have written a Python library for the PMS5003, so that seemed like the sensible place to start, and the examples/all.py script that comes with it made a good basis. By default, however, it outputs values once per second, which is far more often than I have any use for, so I added a time.sleep call to make the script pause for 30 seconds after each output:

```python
...
while True:
    data = pms5003.read()
    print(data)
    time.sleep(30)  # pause 30 s after each reading (requires "import time")
```

This is what the output of all.py looks like:

```text
PM1.0 ug/m3 (ultrafine particles): 1
PM2.5 ug/m3 (combustion particles, organic compounds, metals): 1
PM10 ug/m3 (dust, pollen, mould spores): 3
PM1.0 ug/m3 (atmos env): 1
PM2.5 ug/m3 (atmos env): 1
PM10 ug/m3 (atmos env): 3
>0.3um in 0.1L air: 318
>0.5um in 0.1L air: 94
>1.0um in 0.1L air: 6
>2.5um in 0.1L air: 4
>5.0um in 0.1L air: 2
>10um in 0.1L air: 1
```

Getting data from Pi to Zabbix

Why Zabbix? It collects time-series and textual data, presents it in different ways and carries out actions when certain thresholds are met. I have extensive experience of Zabbix through using it at work and I already have it installed at home for monitoring my small network, so it seems like a good place to start.

Approach 1 – send values with zabbix_sender

The first approach I tried was to use zabbix_sender to send each value to Zabbix as it arrived from all.py. A Perl script of about 50 lines, pmsprocessor.pl, converts the output of all.py into the form expected by zabbix_sender and joins the two processes together with pipes. The advantage of this method is that the raw data never needs to be written to disk, which is a worthwhile consideration on an I/O-constrained platform running off a cheap microSD card.[efn_note]I could, of course, have skipped the intermediate Perl script if my Python were any good; I spent a good few hours trying to reformat the data with Python but couldn’t get past the problem of the data structure from the PMS5003 library not being “iterable”. I eventually concluded that I’m only doing this For Fun and I wasn’t particularly enjoying myself.[/efn_note]

The disadvantage is fragility: if zabbix_sender loses contact with the server, or pms5003-all.py (the modified all.py) dies, the whole thing aborts. I use a systemd unit file to keep the script running, but I found that under systemd pmsprocessor.pl did not exit when either child died, in contrast to what happens when running it interactively. After I put this question to the collective genius of the #a&a IRC channel, TC dug up systemd’s IgnoreSIGPIPE option, which defaults to true. With SIGPIPE ignored, a write to a pipe whose reader has exited returns an error rather than terminating the writer, so a script that doesn’t check for that error simply keeps running. With IgnoreSIGPIPE set to false in the unit file, it all now behaves as expected!
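The conversion step is simple enough to sketch. What follows is not pmsprocessor.pl, just a minimal Python illustration of the same idea, assuming the label text shown in the sample output above and nine hypothetical item keys (the real names in pmsprocessor.pl aren’t given here). It turns each line of all.py output into a line in zabbix_sender’s input-file format, `<hostname> <key> <value>`, using `-` as the hostname so that zabbix_sender takes it from its configuration file. Because it parses the printed text rather than the library’s data structure, it never has to iterate over that structure.

```python
import sys

# Hypothetical item keys: the real names used in pmsprocessor.pl aren't given.
# The three "(atmos env)" lines are deliberately left out, giving nine items.
ITEM_KEYS = {
    "PM1.0 ug/m3 (ultrafine particles)": "pms.pm1",
    "PM2.5 ug/m3 (combustion particles, organic compounds, metals)": "pms.pm2_5",
    "PM10 ug/m3 (dust, pollen, mould spores)": "pms.pm10",
    ">0.3um in 0.1L air": "pms.gt0_3um",
    ">0.5um in 0.1L air": "pms.gt0_5um",
    ">1.0um in 0.1L air": "pms.gt1_0um",
    ">2.5um in 0.1L air": "pms.gt2_5um",
    ">5.0um in 0.1L air": "pms.gt5_0um",
    ">10um in 0.1L air": "pms.gt10um",
}


def to_sender_line(line):
    """Turn 'label: value' into '- key value' for zabbix_sender -i, or None."""
    label, sep, value = line.rpartition(":")
    if not sep:
        return None  # not a 'label: value' line
    key = ITEM_KEYS.get(label.strip())
    if key is None:
        return None  # unwanted or unrecognised label
    # "-" tells zabbix_sender to take the host name from its config file.
    return f"- {key} {value.strip()}"


def convert(lines):
    """Yield a zabbix_sender input line for every recognised reading."""
    for line in lines:
        out = to_sender_line(line)
        if out is not None:
            yield out


def main():
    """Filter stdin to stdout, flushing each line so nothing waits in a buffer."""
    for out in convert(sys.stdin):
        print(out, flush=True)
```

Saved as, say, a hypothetical pms2zabbix.py, it could sit in the pipeline as `python3 -u pms5003-all.py | python3 -c 'import pms2zabbix; pms2zabbix.main()' | zabbix_sender -c /etc/zabbix/zabbix_agentd.conf -r -i -`, assuming that agent config path. Here `-i -` reads from standard input and `-r` sends each value as soon as it arrives. Python block-buffers stdout when it isn’t writing to a terminal, hence the `-u` on the producer.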

Approach 2 – read values from a logfile

The second approach I tried is to write the output of all.py to a log file, timestamping each line with the ‘ts’ command from moreutils. The Zabbix agent then reads this log file and sends the interesting values to the server. Outputting the values every 30 seconds produces about 2MB of data per day. The advantage of this approach is that it simplifies the pipeline: the data source and sink operate independently of each other and don’t need to know what state the other is in. The disadvantages are the additional I/O mentioned above and a growing log file that has to be managed somehow. Simply truncating the file isn’t enough unless the writer opened it in append mode (for example with a >> redirect); otherwise you have to stop the process, clear the file down and restart it.
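As a rough check on the 2MB/day figure, the sketch below timestamps the sample reading shown earlier the way ts does with its default `%b %d %H:%M:%S` format and scales it to one reading every 30 seconds. It is an estimate under stated assumptions: it assumes each reading is exactly those twelve lines and that timestamps use the C locale (so the month is a three-letter English abbreviation).

```python
from datetime import datetime

# The twelve lines of the sample reading shown earlier.
SAMPLE = """\
PM1.0 ug/m3 (ultrafine particles): 1
PM2.5 ug/m3 (combustion particles, organic compounds, metals): 1
PM10 ug/m3 (dust, pollen, mould spores): 3
PM1.0 ug/m3 (atmos env): 1
PM2.5 ug/m3 (atmos env): 1
PM10 ug/m3 (atmos env): 3
>0.3um in 0.1L air: 318
>0.5um in 0.1L air: 94
>1.0um in 0.1L air: 6
>2.5um in 0.1L air: 4
>5.0um in 0.1L air: 2
>10um in 0.1L air: 1
"""

SECONDS_PER_DAY = 24 * 60 * 60


def ts_prefix(line, when):
    """Prefix a line as moreutils ts does with its default format."""
    return when.strftime("%b %d %H:%M:%S") + " " + line


def log_bytes_per_day(block, interval_s=30):
    """Estimate daily log growth if `block` is logged, timestamped, every interval_s seconds."""
    # Any fixed time will do: in the C locale the default timestamp is always 15 characters.
    when = datetime(2000, 1, 1, 0, 0, 0)
    stamped = "".join(ts_prefix(line, when) for line in block.splitlines(keepends=True))
    readings_per_day = SECONDS_PER_DAY // interval_s  # 2880 at 30 s intervals
    return len(stamped.encode("utf-8")) * readings_per_day
```

For this sample, `log_bytes_per_day(SAMPLE)` comes to about 1.6 million bytes, the same order as the observed figure; readings with more digits, or any extra lines in the real output, push it upwards.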

Handling data, server-side

I created nine Zabbix items to handle the 12 parameters output by all.py. What about the other three? The (atmos env) versions always appear to be the same as the first three parameters, so I am not bothering to store them separately. The items fed by zabbix_sender are of the “trapper” type, and their keys match the arbitrarily chosen item names in pmsprocessor.pl.
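Rather than relying on eyeballing, a check along these lines could be run over recorded readings to confirm that the (atmos env) values really do match the first three. It is a minimal sketch that assumes the label text from the sample output above; if it ever reports a mismatch, the three extra items might be worth storing after all.

```python
# Each "(atmos env)" label paired with the standard label it appears to duplicate,
# using the label text from the sample output above.
PAIRS = {
    "PM1.0 ug/m3 (atmos env)": "PM1.0 ug/m3 (ultrafine particles)",
    "PM2.5 ug/m3 (atmos env)": "PM2.5 ug/m3 (combustion particles, organic compounds, metals)",
    "PM10 ug/m3 (atmos env)": "PM10 ug/m3 (dust, pollen, mould spores)",
}


def parse_reading(lines):
    """Parse the 'label: value' lines of one reading into {label: int}."""
    values = {}
    for line in lines:
        label, sep, value = line.rpartition(":")
        if sep and value.strip().isdigit():
            values[label.strip()] = int(value)
    return values


def atmos_mismatches(reading):
    """List (atmos_label, standard_value, atmos_value) for every pair that differs."""
    return [
        (atmos, reading[std], reading[atmos])
        for atmos, std in PAIRS.items()
        if atmos in reading and std in reading and reading[atmos] != reading[std]
    ]
```

Lines taken from the ts-stamped log in approach 2 would need their 16-character timestamp prefix removed first (`line[16:]` with ts’s default format).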

The output

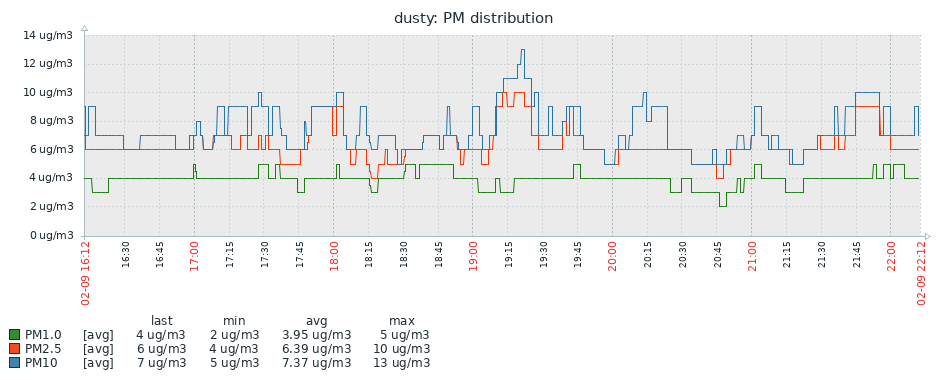

Here are six hours of data, as plotted by Zabbix: